Wed, 13 Mar 2013 18:00:00 GMT

Mon, 31 Dec 2012 23:00:07 GMT

So. I have decided to make predictions again, after skipping a year. Everything is at best "just a feeling": partly fears, partly wishful thinking. Traditionally in German, this time grouped:

- Politics:

- The federal government will hold together until September, when the federal election (Bundestagswahl) takes place.

- The FDP and the Pirates (Piraten) will narrowly miss the 5% threshold in the federal election.

- A grand coalition will form; Angela Merkel remains Chancellor.

- The court will reject the NPD ban proceedings. In response, politicians will reaffirm that informants (V-Männer) are to be used more widely again.

- Xenophobia within Europe will increase because of the financial crisis and the problems resulting from it.

- Genital mutilation of male children for religious reasons will be permitted by law.

- After North Korea undertakes further experiments with satellites, it will be attacked by the USA. Thanks to South Korea's influence, the resistance will be slight.

- Art and popular culture:

- The new Star Trek film "Into Darkness" will reach the cinemas late and will be sharply criticized, but will be a success.

- The new Zelda installment for the Wii U will be announced, with its title, at the end of the year.

- An official new Portal installment will be announced.

- Software and technology:

- Windows 8 will be a success.

- The iPod 6 will be announced.

- Wheezy will become stable.

- The Boot To Gecko project will be discontinued. The developers will concentrate more on integrating web services into smartphones.

- Various compilers will announce that they now implement the C++11 standard in a stable way.

- Since neither ZFS nor BTRFS really catches on under Linux, an EXT5 will be announced.

- Science:

- P not equal to NP will be proven, no error will be found, and there will be various efforts to write the proof down for a formal proof checker.

- There will be a breakthrough in research on superluminal effects which is still far from faster-than-light flight, but nevertheless gives hope and is halfway noticed by the public.

- It will be shown that the Collatz conjecture implies a large cardinal axiom.

- Miscellaneous:

- The world will not end.

- As every year, the weather will again be somehow extreme this year.

- As every year, some epidemic will spread again this year, most likely during the summer news lull (Sommerloch).

Sat, 08 Dec 2012 22:48:03 GMT

I will use some results from Transfinite Chomp by S. Huddleston and J. Shurman which I found on this excellent post from Xor's hammer.

Also keep in mind that this is not a scientific paper: I will not always put footnotes everywhere I use information from the named paper. In particular, I change the notation so that it will (hopefully) be compatible with ZFC (that is, there is no set of all ordinal numbers, etc.), as I think I have seen proofs in that paper that are not correct because of this (but I might be mistaken).

1 Finite Chomp

Before understanding ordinal chomp, it is useful to know finite chomp, and what makes it so interesting. We will try to give a definition that is less "economic" than the usual ones, but much more compatible with the intuition of a chocolate bar, which is the usual metaphor for explaining chomp.

Keep in mind that these definitions are preliminary and will later be generalized.

Definition: As a slight abuse of notation, we identify every integer with the set of its preceding numbers. So

Definition: A

Lemma: Every sequence of legal moves is finite.

Proof: Trivially, the number of preimages of

Definition: Let

- every branch of the tree ends in the final chocolate bar (that is, every branch is a whole game)

- the final chocolate bar in every branch is a player

chocolate bar (that is, player

wins)

- every player

node in the subtree is adjacent to all the player n chocolate bars that are adjacent in the original tree (that is, the winning of player n does not depend on the choices of player

We distinguish between weak and strong existence and disjunction. Usually, this is done to get a finer distinction between constructive and non-constructive propositions. However, we do not want to get too deep into that topic. So we give an informal definition.

Informal Definition: The weak disjunction between two propositions

and

is a disjunction which we proved without giving a possibility of deciding which one of these actually holds inside the proof. Strong disjunction

always comes with a theoretical possibility of decision directly inside the proof. The weak existence

holds if only the existence of an object is proved, but no way of calculating it is given implicitly with the proof. With strong existence

comes such a way.

The distinction between those two kinds of existence and disjunction can be seen as one main difference between constructive and classical mathematics. While in constructive theories, one can set , in non-constructive mathematics, this is equivalent to usual disjunction.

Lemma: For every

Proof: Player 1 has to use

Lemma: For every chocolate bar

Proof: Note first that the "or" is exclusive here: Assume both existed, then consider a strategy for player 1. It will have some edge from

On the other hand, assume there is no strategy for player 1 or player 2. Then there must be some move player 1 can make, such that player 2 still has no winning strategy after this move. Player 2 can answer with a move after which player 1 again has no winning strategy, and so on. This is a contradiction, as every game has finite length.

Theorem: For every

Proof: Assume player 2 had a strategy

So we have an explicit winning strategy for finite quadratic chocolate bars. Unfortunately, even though we know we can always win, we have no winning strategy other than brute force for the remaining cases (except for some additional special cases like our above Lemma).
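For small bars, the brute-force search mentioned above can be sketched as follows. This is only a sketch under assumptions that are not taken from the text: the bar is stored as a non-increasing list of row lengths, the poisoned piece sits at row 0, column 0, and whoever has to take it loses. The names `Bar`, `moverWins` and `firstPlayerWins` are made up for this illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <vector>

// A bar is a list of row lengths, non-increasing from top to bottom.
// Assumption: the poisoned piece is at row 0, column 0.
using Bar = std::vector<int>;

// Returns true if the player to move can force a win from `bar`.
bool moverWins(const Bar& bar, std::map<Bar, bool>& memo) {
    auto known = memo.find(bar);
    if (known != memo.end()) return known->second;

    bool win = false;
    for (std::size_t i = 0; i < bar.size() && !win; ++i) {
        for (int j = 0; j < bar[i] && !win; ++j) {
            if (i == 0 && j == 0) continue;  // taking the poison loses
            // Chomp at (i, j): every row from i downwards is cut to length j.
            Bar next = bar;
            for (std::size_t r = i; r < next.size(); ++r)
                next[r] = std::min(next[r], j);
            // A move wins if it leaves the opponent in a losing position.
            if (!moverWins(next, memo)) win = true;
        }
    }
    // If only the poisoned piece is left, no move was tried: the mover loses.
    memo[bar] = win;
    return win;
}

// Convenience wrapper for a full bar with `rows` rows and `cols` columns.
bool firstPlayerWins(int rows, int cols) {
    assert(rows > 0 && cols > 0);
    std::map<Bar, bool> memo;
    return moverWins(Bar(static_cast<std::size_t>(rows), cols), memo);
}
```

Calling `firstPlayerWins(3, 4)` explores every reachable position once. The memo table grows quickly with the size of the bar, which is why this only helps for small cases.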

Short introduction to ordinal numbers

Definition: A (strict) well-ordering is a (strict) total ordering in which every nonempty subset has a minimum. A transitive set is a set of which every element is also a subset. An ordinal (number) is a transitive set which is strictly well-ordered by the $\in$-relation.

Here are some facts about ordinals which I will not prove:

- The above notation

is consistent with them.

- Every well-ordering on a set is isomorphic to some unique ordinal.

- Every strictly decreasing sequence of ordinals is finite.

- The collection of ordinals

forms a proper class. It is not a set.

- Every ordinal $\alpha$ has a successor $\alpha + 1$.

- Some ordinals also have a predecessor. If

has no predecessor, we say that

is a limit ordinal, writing

.

- The smallest limit ordinal is called $\omega$; it is the set of finite (natural) numbers.

Definition: A cardinal is an ordinal which satisfies

.

We will only need cardinals in one small argument, in which we just need some "upper bound" for stuff we want to do.

A transfinite chocolate bar is defined similarly to the finite ones: An

We first prove a few generalizations for properties of finite chomp.

Lemma: The sequence

Proof: By definition of the application and

Theorem: Every sequence

Proof: By the Lemma, we know that the sequences

Lemma: For every chocolate bar

Proof: The above proof only requires every game to be of finite length, so it also applies here.

Lemma: Player 1 has a winning strategy for

Proof: The strategy is the same as for the finite case, but we have no parity here. However, it is easy to see that after every player 2 move, player 1 recovers a quadratic state, from which he has a winning move again.

Theorem: If

Proof: The argument is exactly the same as for the finite case.

One interesting part about transfinite chomp is that in some cases it is possible that the second player has a winning strategy.

Theorem: Let

Proof: Firstly we show uniqueness. Assuming the existence of

- For

, we use induction on

. If

,

,

. Otherwise, we assume that for all legal chocolate bars

with

, there already exists a

with the property. Especially then, for all legal chocolate bars

that can be reached from

by one move, there exist

. Then

is the solution: Trivially, every move player 1 makes leads to a state which player 2 can now win. Notice that if

was our previous cardinal,

is sufficient for everything we do (I am too lazy now to reason why

should be sufficient).

- For every other

, we may assume that for every pair of

and a legal chocolate bar

some

already exists. Let

be the

to the power of the supremum of all previous cardinals. Furthermore, for every legal chocolate bar

which can be reached from

for some

by a move

with

, we also already have a

. Then the

of all these exists (since the cardinality is bounded), and its own cardinality is bounded, and it trivially satisfies what we want.

Proof: For

Fri, 07 Dec 2012 23:00:07 GMT

The first right you usually get is to vote. What would Common Lisp vote? Politics is complicated, and there is so much to choose, while Common Lisp does not like choosing, rather supporting everything. Not sure what it would vote. It is probably compatible with everything.

Furthermore, at that age you will be sui juris. You can do everything, but you are also responsible for everything you do. Common Lisp has its own ways of dealing with things; some of them are still unique, and many of them were unique for a long time. Meanwhile, other, younger languages with similar concepts have evolved, like Ruby, and they are more widespread. Common Lisp pays the price of having been ahead of its time, at a time when Windows 95 was new.

Also, society's duty to protect you rapidly decreases. Not only are you responsible for everything you do, but there are also fewer people willing to encourage you to keep finding yourself: you have had enough time for that. People begin to demand that you actually achieve valuable things, that is, either things that are economically or socially valuable, or things that show that you will become economically or socially valuable. I think that Common Lisp has done this already: It provided experience with concepts that combined academic and practical principles of programming. Maybe its glorious days are gone, but it is still an interesting language to use and to learn from.

In Germany, you have to pay a fee in every quarter of the year in which you go to a doctor. That is, when you have a medical problem, you lose additional money. Well, it seems like Common Lisp does not really use any health insurance anyway. Common Lisp has several little diseases for which there was never enough money to heal them. I am afraid that there is not, and will not be, enough money to heal these diseases. But hopefully, the usual practices and implementations will have a self-healing effect.

Also, you can get a driver's license when you are 18. I am not sure how successful Common Lisp is at driving cars. But as far as I have heard, it has lost much of its importance for AI and robotics. I am not sure whether Common Lisp will ever drive a car. But if so, it would be awesome!

Wed, 28 Nov 2012 04:39:49 GMT

The really big topic was definitely the internet, and the many new gadgets that evolved. So much seems to have changed. While back in my day™ - which is not that long ago yet - the greatest technical threat for the teachers' precious blabber were alarms of digital watches, it seems to be considered normal today that pupils are using their laptops, tablets and smartphones.

However, while the prognosis about the big topic turned out to be wrong, many prognoses about the internet did not. One of my teachers compared the weird situation of everybody being able to publish everything, and the difficulty of checking it, to the "Wild West", which may be regulated someday. Another one claimed that education will focus more on teaching how to get the information required for some task, rather than on building a huge collection of knowledge. Wikipedia puts a lot of effort into the quality of its source material, and people have learned to distinguish between claims and quotes (which is, for example, a claim, since I have no evidence for this).

But since then, journalists have also been questioned more, because people have learned that not everything a journalist says must be correct. Journalists are no longer the masters of information, and this takes a lot of possibilities for regulation away from the state.

And now, we have Anonymous, we have DuckDuckGo advertising for VanishingRights.com, we have an Internet Defense League, we have projects like Tor and Freenet, and even Google requests "Verteidige dein Netz" (defend your net).

Maybe in 50 years people will wonder whether we had no other problems. Or they will look back and wish to have lived in that glorious time. We cannot know what will actually happen. The internet is different from what people imagined 20 years ago; there is much more chaos than one would have expected. On the other hand, there are many more possibilities than one would have expected, like people with smartphones who are online virtually always, and cheap real-time communication with huge parts of the world using only a little gadget.

One may argue that the internet on the whole is a bad thing. However, it is there, and it will probably stay there, and it has already influenced most of us somehow. We have something new with which we have no experience. These times are weird.

Sat, 13 Oct 2012 15:44:07 GMT

Introduction

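$(\mathbb{R}, +, \cdot)$ should be a field:

- $a + b = b + a$, $a \cdot b = b \cdot a$, $(a + b) + c = a + (b + c)$, $(a \cdot b) \cdot c = a \cdot (b \cdot c)$, $a \cdot (b + c) = a \cdot b + a \cdot c$

- There exist additive and multiplicative neutral elements $0$ and $1$, such that $a + 0 = a$ and $a \cdot 1 = a$

- There exists an additive inverse $-a$ for every $a$, such that $a + (-a) = 0$. There exists a multiplicative inverse $a^{-1}$ for every $a \neq 0$ such that $a \cdot a^{-1} = 1$

$\le$ on $\mathbb{R}$ should be a linear partial ordering relation:

- $a \le b$ and $b \le c$ implies $a \le c$

- $a \le b$ and $b \le a$ implies $a = b$

- $a \le b$ or $b \le a$ holds for all $a, b$

$\mathbb{R}$ should be an ordered field regarding $\le$:

- $a \le b$ implies $a + c \le b + c$

- $0 \le a$ and $0 \le b$ implies $0 \le a \cdot b$

$\mathbb{R}$ is complete over $\le$:

- If $b \le a$ for all $a \in A$ (for a nonempty $A \subseteq \mathbb{R}$ and some $b$), then there exists a maximal $m$ with $m \le a$ for all $a \in A$.

- If $a \le b$ for all $a \in A$ (for a nonempty $A \subseteq \mathbb{R}$ and some $b$), then there exists a minimal $m$ with $a \le m$ for all $a \in A$.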

It can be shown that these axioms are sufficient to classify a unique structure "up to isomorphism", that is, two such structures are equivalent up to "renaming" of the elements. However, this just says that if such a structure exists, it is unique. It does not show that it actually exists, which is what we will approach.

Equivalence Relations

Equivalence relations are an extremely important concept in mathematics. They show up in almost every mathematical topic, like logic, algebra, topology. It is important to understand this concept to define $\mathbb{R}$.

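An equivalence relation is a binary relation that usually somehow resembles similarities between objects. Formally, a relation $\sim$ on a set $S$ is an equivalence relation if it satisfies:

- Reflexivity: For all $a \in S$ we have $a \sim a$

- Symmetry: For all $a, b \in S$ we have $a \sim b \Rightarrow b \sim a$

- Transitivity: For all $a, b, c \in S$ if we have $a \sim b$ and $b \sim c$ then we also have $a \sim c$.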

For every $a \in S$ we can define its equivalence class $[a]_\sim := \{ b \in S \mid a \sim b \}$.

Lemma: Two equivalence classes $[a]_\sim$ and $[b]_\sim$ are either equal or disjoint.

Proof: Every equivalence class contains at least one element, so they cannot be both disjoint and equal. Assuming they are not disjoint, they must have a common element $c$, but then $a \sim c$ and $c \sim b$, so by transitivity, $a \sim b$, hence $[a]_\sim = [b]_\sim$.∎

Hence, the set of equivalence classes over $S$ regarding $\sim$ is a partition of $S$. We call this set $S/\sim$, say "S modulo $\sim$".

Trivial examples are standard equality $=$, in which case $S/=$ can be identified with $S$ in a canonical way, and the "squash"-relation that makes every two elements equal, in which case there is only one equivalence class.

Furthermore, important equivalence relations are the residue-equalities on $\mathbb{Z}$, where $a \equiv b \pmod{n}$ means that $a - b$ is divisible by $n$. Their equivalence classes are the residue classes, and they can be added and multiplied elementwise, forming the residue class rings.
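To make the partition into residue classes concrete, here is a small sketch. It assumes the usual meaning of the residue-equality (two integers are equivalent modulo $n$ if their difference is divisible by $n$) and only looks at the numbers $0, \dots, \text{count}-1$. The function names are made up for this illustration.

```cpp
#include <cassert>
#include <vector>

// Groups the integers 0 .. count-1 into their residue classes modulo n.
// classes[r] holds every number a with a % n == r.
std::vector<std::vector<int>> residueClasses(int count, int n) {
    assert(n > 0);
    std::vector<std::vector<int>> classes(n);
    for (int a = 0; a < count; ++a)
        classes[a % n].push_back(a);
    return classes;
}

// Two integers are equivalent iff their difference is divisible by n.
bool congruent(int a, int b, int n) {
    return (a - b) % n == 0;
}
```

Every number lands in exactly one class, which is the partition property from the Lemma: two classes are either equal or disjoint.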

Natural Numbers

Our bootstrapping of $\mathbb{R}$ begins with a slightly simpler object, $\mathbb{N}$. First, we give axioms for something we shall call a Peano Structure:

A triple $(N, 0, S)$, where $N$ is a set, $0 \in N$ and $S : N \to N$ is a function, is called a Peano Structure, if

- There is no $n \in N$ with $S(n) = 0$

- For every

,

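- For every $n, m \in N$, if $S(n) = S(m)$, then $n = m$

- If $M \subseteq N$, $0 \in M$ and if $n \in M \Rightarrow S(n) \in M$, then $M = N$

Here $0$ is called zero, and $S$ is called the successor function of that Peano structure.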

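Mathematicians call a bijective function between two structures that preserves the relevant parts of that structure an isomorphism. In case of Peano Structures, a function $f : N \to N'$ between two Peano Structures $(N, 0, S)$ and $(N', 0', S')$ is an isomorphism if

- $f$ is bijective

- $f(0) = 0'$

- For all $n \in N$ we have $f(S(n)) = S'(f(n))$, and for all $n' \in N'$ we have $f^{-1}(S'(n')) = S(f^{-1}(n'))$.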

If such an isomorphism exists between two Peano Structures, they are called isomorphic. They are then essentially equal structures, in the sense that there is no mathematically meaningful difference between them, and the one structure is a result of just renaming the elements of the other.

Lemma: Every two Peano structures are isomorphic (in one set universe)

Proof: Let two Peano structures $(N, 0, S)$ and $(N', 0', S')$ be given. We define a function $f : N \to N'$ recursively by $f(0) := 0'$, $f(S(n)) := S'(f(n))$. Therefore, the second and a part of the third axiom are already satisfied by definition.

We show that $f$ is surjective: We know that the image of $f$ is a subset of $N'$, and we know that $0'$ is in this image. If $n'$ is in the image of $f$, say, $n' = f(n)$ for some $n \in N$, then we know that $f(S(n)) = S'(n')$ by definition, hence, $S'(n')$ is also in the image of $f$. By the axioms of Peano structures, this means that the image of $f$ is $N'$.

Now consider the function $g : N' \to N$ with $g(0') := 0$, $g(S'(n')) := S(g(n'))$. By the same argument as above, $g$ is surjective. Furthermore, since we have $g(f(0)) = 0$ and from $g(f(n)) = n$ follows $g(f(S(n))) = S(n)$, we have $g(f(n)) = n$ for all $n \in N$, and similarly $f(g(n')) = n'$ for all $n' \in N'$, hence, $g = f^{-1}$. Therefore, $f$ is also injective, and the third axiom of isomorphism is proved.∎

What we now know is that if there is a peano structure, then there is - up to isomorphism - only one. There are several ways to show that (in a set universe) there actually is one. The common way is to classify a special set of finite sets, the finite cardinal numbers. In ZFC, this can be done using the well-ordering theorem, the axiom of infinity and the axiom of comprehension. But doing this is outside our scope, we just believe the following theorem:

Theorem: A peano structure exists (in every set universe).

And now we can take an arbitrary Peano structure $(N, 0, S)$ as our set of natural numbers, $\mathbb{N}$, by setting $\mathbb{N} := N$ and $n + 1 := S(n)$ [note that we have yet to define addition, this is just for intuition]. The first three axioms of Peano structures become obvious arithmetical laws, the fourth axiom becomes the induction axiom. We can define addition recursively by $n + 0 := n$, $n + S(m) := S(n + m)$.

Exercise: Prove

We can define multiplication recursively by $n \cdot 0 := 0$, $n \cdot S(m) := n \cdot m + n$.

The arithmetical laws follow from these definitions.
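The recursive definitions can be tried out directly. The following sketch assumes the standard recursion equations $n + 0 = n$, $n + S(m) = S(n + m)$, $n \cdot 0 = 0$ and $n \cdot S(m) = n \cdot m + n$, and models natural numbers by unsigned machine integers. Only zero, the successor and the predecessor of a nonzero number are used; `Nat`, `succ` and `pred` are names chosen for this illustration.

```cpp
#include <cassert>

// Natural numbers are modelled by unsigned integers (an assumption of this
// sketch; a Peano structure itself has no size limit).
using Nat = unsigned long;

Nat succ(Nat n) { return n + 1; }

// Predecessor of a nonzero number, i.e. the m with succ(m) == n.
Nat pred(Nat n) {
    assert(n != 0);
    return n - 1;
}

// n + 0 = n,  n + S(m) = S(n + m)
Nat add(Nat n, Nat m) {
    return m == 0 ? n : succ(add(n, pred(m)));
}

// n * 0 = 0,  n * S(m) = n * m + n
Nat mul(Nat n, Nat m) {
    return m == 0 ? 0 : add(mul(n, pred(m)), n);
}
```

Both functions recurse on the second argument only, mirroring the fact that the definitions are justified by the induction axiom.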

Integers and Rational Numbers

Now that we have defined $\mathbb{N}$, we want to reach out for $\mathbb{Z}$ and $\mathbb{Q}$, and it turns out that this is much easier than getting $\mathbb{N}$ in the first place. To a non-mathematician, these definitions may sound rather overcomplicated, but it turns out that they are much easier to handle than "less complicated" ones.

The need for negative numbers comes from the fact that subtraction is not total on $\mathbb{N}$, that is, for example, $2 - 3 \notin \mathbb{N}$. Subtraction itself is just the operation of solving an additive equation, $3 + x = 2$ in our example. To be consistent with most of our arithmetical laws so far, it is desirable that the solution of $3 + x = 2$ is equal to the solution of $4 + x = 3$ and generally $(3 + n) + x = 2 + n$, due to cancellation.

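We observe that the equations $a + x = b$ and $c + x = d$ must have the same solutions in $\mathbb{Z}$ if $a + d = b + c$. Now, let us have a look at the set $\mathbb{N} \times \mathbb{N}$ of pairs of natural numbers. If we define $(a, b) \sim (c, d) :\Leftrightarrow a + d = b + c$, then $\sim$ is an equivalence relation on $\mathbb{N} \times \mathbb{N}$: Reflexivity and symmetry are obvious. For transitivity, consider $(a, b) \sim (c, d)$ and $(c, d) \sim (e, f)$. That is, $a + d = b + c$ and $c + f = d + e$. Since we have equality of sums here, we may subtract without getting negative results, therefore $d = b + c - a$, so $c + f = (b + c - a) + e$ and hence $a + f = b + e$, which means $(a, b) \sim (e, f)$. Now we define $\mathbb{Z} := (\mathbb{N} \times \mathbb{N})/\sim$.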

We furthermore define $[(a, b)] + [(c, d)] := [(a + c, b + d)]$ and $[(a, b)] \cdot [(c, d)] := [(a d + b c, a c + b d)]$. We have to show that this is well-defined, which is left as an exercise.

Now, at schools you are taught that $\mathbb{N} \subseteq \mathbb{Z}$, and even in higher mathematics this is often used. With our definition, this is of course not the case, but we can identify every natural number $n$ with the equivalence class $[(0, n)]$. As isomorphic structures do not need to be distinguished, we can safely redefine $\mathbb{N}$ to be the set of these equivalence classes. The changes we would have to accomplish are below the usual abstraction level.

The structure $\mathbb{Z}$ with addition and multiplication is called a Ring.
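The construction of the integers from pairs can be played through in code. This sketch makes its assumptions explicit: a pair $(a, b)$ of natural numbers stands for the solution $x$ of $a + x = b$, two pairs $(a, b)$ and $(c, d)$ are equivalent if $a + d = b + c$, and addition works componentwise. `IntPair`, `equivalent`, `add` and `normalize` are hypothetical names, and machine integers stand in for natural numbers.

```cpp
#include <cassert>

// A pair (a, b) of natural numbers stands for the solution x of a + x = b.
struct IntPair {
    unsigned a;
    unsigned b;
};

// (a, b) ~ (c, d)  iff  a + d = b + c
bool equivalent(IntPair x, IntPair y) {
    return x.a + y.b == x.b + y.a;
}

// Componentwise addition of representatives.
IntPair add(IntPair x, IntPair y) {
    return {x.a + y.a, x.b + y.b};
}

// Picks the representative of the class in which one component is zero.
IntPair normalize(IntPair x) {
    unsigned m = x.a < x.b ? x.a : x.b;
    IntPair r{x.a - m, x.b - m};
    assert(equivalent(x, r));  // normalizing must not leave the class
    return r;
}
```

For example, `add({3, 2}, {0, 5})` yields the pair `(3, 7)`, which is equivalent to `(0, 4)`: the solution of $3 + x = 2$ plus the number $5$ gives $4$. Different representatives of the same classes give equivalent results, which is what "well-defined" means here.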

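Rational numbers are similar, this time we add solutions to $b \cdot x = a$, but we have to avoid the pathological case of $b = 0$. The reason is that we want the rationals to be a Field. Similar to how we did before, we define an equivalence relation $\sim$ on $\mathbb{Z} \times (\mathbb{Z} \setminus \{0\})$, namely $(a, b) \sim (c, d) :\Leftrightarrow a \cdot d = b \cdot c$. You are probably familiar with this representation of rational numbers, you just write $\frac{a}{b}$ instead of $(a, b)$ - we just chose an equivalence that tells when two fractions are equal, and we have $\mathbb{Q} := (\mathbb{Z} \times (\mathbb{Z} \setminus \{0\}))/\sim$.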

Cauchy Sequences

The step from rational numbers to real numbers is a bit more complicated. It is not sufficient to add solutions of special equations to our system. However, of course, in many cases, the real numbers are just solutions of equations. If we add zeroes of all polynomials, for example, we would get (more than) the algebraic real numbers over $\mathbb{Q}$. But there are also transcendental numbers like $\pi$.

Let us first have a look at one of the first irrational numbers ever discovered: $\sqrt{2}$.

Theorem: There is no rational solution to the equation $x^2 = 2$.

Proof: By monotony of $x \mapsto x^2$ on the positive numbers we know that a positive solution must lie between $1$ and $2$, and can especially not be integral. Now assume there was some solution $x \in \mathbb{Q}$ with $x = \frac{p}{q}$, where w.l.o.g. $\gcd(p, q) = 1$. Then also $\frac{p^2}{q^2} = 2$. But then $p^2 = 2 q^2$, and as $2$ cannot be factorized nontrivially, $p$ must be divisible by $2$, therefore, $p = 2r$ for some $r$. But then $4 r^2 = 2 q^2$ yields $2 r^2 = q^2$ and by the same argument as before, we get that $q$ is divisible by $2$, which contradicts our assumption $\gcd(p, q) = 1$.∎

So we know that $\sqrt{2} \notin \mathbb{Q}$, but still, we can define the set $M := \{ x \in \mathbb{Q} \mid x^2 < 2 \}$, and this set will be an open interval, with rational numbers approaching $\sqrt{2}$ up to an arbitrary precision. The set $M$ is bounded, but has no least rational upper bound. The set $\mathbb{Q}$ is therefore not complete, we say.

However, for every given $\varepsilon > 0$ we can give a rational number $x$ such that $|x^2 - 2| < \varepsilon$. We now touch the topic of infinitesimal analysis, however, do not fear, we do not have to dive into it too deeply. The crucial concept we have to define now is the Cauchy sequence:

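Definition: A sequence $(a_n)_{n \in \mathbb{N}}$ of rational numbers is called a Cauchy sequence, if for every $\varepsilon > 0$ we can find an $N \in \mathbb{N}$ such that for all $m, n > N$ we have $|a_m - a_n| < \varepsilon$.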

That is, if we have such an $\varepsilon$, we know an $N$ such that all elements of that sequence above $N$ do not differ by more than $\varepsilon$, and since we may choose this $\varepsilon$ arbitrarily small, the sequence "approximates" something.

For some of these sequences, we can give a rational number, which they "obviously" approximate. For example, somehow, the sequence "approximates"

: It gets arbitrarily close to

. On the other hand, if we set

, this is a cauchy sequence, but does not approximate any rational number. However, we want this to be a number - in general, we want things to which we have an intuitive arbitrarily precise "approximation" to be numbers - we want the numbers to be complete.
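One concrete sequence of rational numbers whose squares get arbitrarily close to $2$ can be computed with exact integer arithmetic. The sequence used here is not necessarily the one meant above: it is Newton's iteration $x \mapsto \frac{1}{2}(x + \frac{2}{x})$ starting at $1$, chosen for this sketch because every element is a fraction that is easy to compute. `Fraction`, `newtonStep` and `sqrt2Approx` are names made up for the illustration.

```cpp
#include <cassert>
#include <cstdint>

// A rational number p/q with q > 0.
struct Fraction {
    std::int64_t p;
    std::int64_t q;
};

// One Newton step for x^2 = 2:  x -> (x + 2/x) / 2,
// which for x = p/q is (p^2 + 2 q^2) / (2 p q).
Fraction newtonStep(Fraction x) {
    return {x.p * x.p + 2 * x.q * x.q, 2 * x.p * x.q};
}

// The n-th element of the sequence, starting from 1/1.
// Assumption: 0 <= n <= 5, since later elements overflow 64-bit integers.
Fraction sqrt2Approx(int n) {
    assert(n >= 0 && n <= 5);
    Fraction x{1, 1};
    for (int i = 0; i < n; ++i) x = newtonStep(x);
    return x;
}
```

The first elements are $\frac{3}{2}$, $\frac{17}{12}$, $\frac{577}{408}$ and $\frac{665857}{470832}$. Each of them satisfies $p^2 - 2q^2 = 1$, so the square of $\frac{p}{q}$ differs from $2$ by exactly $\frac{1}{q^2}$, which shrinks rapidly, although by the Theorem above no element can hit $2$ exactly.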

The easiest way to make sure this is the case is to just postulate that every Cauchy sequence has such an approximation (a "limit point"), but then the problem remains that we do not really know how the objects we add should look like. So we simply do the first thing that comes to your mind when you are a mathematician: Just use the Cauchy sequences themselves, and define a proper equivalence relation on them.

To do this, we first have to make a formal definition of what we mean by an "approximation", but we can give it for rational approximated numbers only, so far:

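Definition: A sequence $(a_n)_{n \in \mathbb{N}}$ converges towards $a \in \mathbb{Q}$, if for an arbitrarily small $\varepsilon > 0$ we can find an $N \in \mathbb{N}$ such that for all $n > N$ we have $|a_n - a| < \varepsilon$.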

As an exercise, check whether our above statements on "approximations" hold for this definition. Now we define an equivalence relation on cauchy sequences:

Definition: Two Cauchy sequences $(a_n)$ and $(b_n)$ converge towards the same point, denoted by $(a_n) \sim (b_n)$, if the sequence $(a_n - b_n)$ converges towards $0$.

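Theorem: $\sim$ is an equivalence relation.

Proof: Reflexivity holds trivially, since $a_n - a_n = 0$. Symmetry is trivial since $|a_n - b_n| = |b_n - a_n|$. For transitivity, assume $(a_n) \sim (b_n)$ and $(b_n) \sim (c_n)$. Now let an $\varepsilon > 0$ be given. By definition, we find an $N_1$ such that for all $n > N_1$ we have $|a_n - b_n| < \frac{\varepsilon}{2}$, and similarly, we find an $N_2$ such that for all $n > N_2$ we have $|b_n - c_n| < \frac{\varepsilon}{2}$. But then we have $|a_n - c_n| \le |a_n - b_n| + |b_n - c_n| < \varepsilon$ for all $n > \max(N_1, N_2)$. Therefore, $(a_n) \sim (c_n)$.∎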

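Let $C$ be the set of Cauchy sequences, then we define $\mathbb{R} := C/\sim$. We can embed the rational numbers into the real numbers by $q \mapsto [(q)_{n \in \mathbb{N}}]$, the class of the constant sequence. Furthermore, we define addition componentwise by $[(a_n)] + [(b_n)] := [(a_n + b_n)]$,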

same for multiplication. The simple arithmetic laws then also follow componentwise. For the ordering, we say that $[(a_n)] < [(b_n)]$ holds when there is a rational $\delta > 0$ such that $a_n + \delta < b_n$ for all but finitely many $n$, and $\le$ means that $<$ or equality holds.

Of course, we still have to show a lot of things, like showing that this is really a field with the desired axioms, and showing that addition and multiplication defined in this way are compatible with the equivalence relation. However, this is just technical, and we omit it, since we think that for people not familiar with this topic, it is already sufficiently complicated.

Prospects

For everyone who does not yet feel challenged enough, I have a few prospects. The first one is from set theory over the real line:

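Hypothesis (Suslin): Let $S$ be a set and $\le \subseteq S \times S$ satisfy the following conditions:

- $\le$ is a linear ordering without a least or greatest element: $a \le b \wedge b \le c \Rightarrow a \le c$, $a \le b \wedge b \le a \Rightarrow a = b$, $a \le b \vee b \le a$.

- $\le$ is dense: If $a \le b$ and $a \neq b$, then there is a $c \notin \{a, b\}$ such that $a \le c \le b$.

- $\le$ is complete: If for some nonempty $A \subseteq S$ there exists an $s \in S$ such that all $a \in A$ are $\le s$ ("$A$ is bounded"), then there exists a smallest $s$ with this property ("$s$ is the supremum of $A$ in $S$").

- Define intervals in the canonical way by $(a, b) := \{ c \in S \mid a \le c \le b,\ c \notin \{a, b\} \}$. Then for every set $I$ of pairwise disjoint intervals, there exists a surjective function $f : \mathbb{N} \to I$ ("every set of pairwise disjoint intervals is countable").

Then $(S, \le)$ is isomorphic to $(\mathbb{R}, \le)$.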

Theorem: Suslin's Hypothesis is undecidable over standard set theory (ZFC).

Of course, we will not prove this, as it involves a lot of model theory. Let us move on with our second prospect, the p-adic numbers. If we look at the proof that $\sim$ is an equivalence relation, we notice that what we really needed to prove this were a few (in)equalities on the absolute value, namely: $|a - a| = 0$, $|a - b| = |b - a|$, and $|a - c| \le |a - b| + |b - c|$.

The third one is called the triangle inequality. It is crucial for the transitivity proof. On the other hand, we might think of some other function which satisfies the same axioms. And in fact, there is a mathematical concept which generalizes this:

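Definition: A function $d : X \times X \to \mathbb{Q}$ is called a metric (or distance function) on $X$ if it satisfies $d(a, b) = 0 \Leftrightarrow a = b$, $d(a, b) = d(b, a)$ and $d(a, c) \le d(a, b) + d(b, c)$ for all $a, b, c \in X$.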

Note that this definition is usually made with real numbers instead of rational numbers.

Now, as above, we can define $(a_n) \sim_d (b_n)$ for a given metric $d$ by requiring that $d(a_n, b_n)$ converges towards $0$, and it will also be an equivalence relation.

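Now, let $p$ be some prime number. For every rational number $q \neq 0$, we can find a unique integer $k$, such that $q = p^k \cdot \frac{a}{b}$, where $a, b \in \mathbb{Z}$, and neither $a$ nor $b$ are divisible by $p$. Define $|q|_p := p^{-k}$ for every such $q$, and $|0|_p := 0$. Define $d_p(x, y) := |x - y|_p$.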
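The exponent in this definition is easy to compute. The sketch below assumes the usual $p$-adic absolute value: write a nonzero fraction as $p^k \cdot \frac{a}{b}$ with neither $a$ nor $b$ divisible by $p$, and take $p^{-k}$. The function names are hypothetical, and the absolute value is returned as a floating point number only for display purposes.

```cpp
#include <cassert>

// Exponent of the prime p in the nonzero integer n.
int valuationInt(long n, long p) {
    assert(n != 0 && p > 1);
    int k = 0;
    while (n % p == 0) {
        n /= p;
        ++k;
    }
    return k;
}

// The unique k with num/den = p^k * a/b, where p divides neither a nor b.
// Assumption: num and den are nonzero.
int valuation(long num, long den, long p) {
    return valuationInt(num, p) - valuationInt(den, p);
}

// |num/den|_p = p^(-k), as a floating point number.
double padicAbs(long num, long den, long p) {
    int k = valuation(num, den, p);
    double result = 1.0;
    for (; k > 0; --k) result /= static_cast<double>(p);
    for (; k < 0; ++k) result *= static_cast<double>(p);
    return result;
}
```

For instance, $8 = 2^3$ is "small" in the $2$-adic sense, with absolute value $\frac{1}{8}$, while $\frac{1}{9}$ is "large" $3$-adically, with absolute value $9$.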

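Lemma: $|x + y|_p \le \max(|x|_p, |y|_p)$

Proof: If $x$, $y$ or $x + y$ is zero, there is nothing to show. Otherwise let $x = p^k \frac{a}{b}$ and $y = p^l \frac{c}{d}$ with fully cancelled fractions not containing $p$ as a factor, and let w.l.o.g. $k \le l$. Then $x + y = p^k \cdot \frac{a d + p^{l - k} b c}{b d}$. Now, $b d$ is not divisible by $p$, so the fraction can only contain non-negative powers of $p$. Therefore, $|x + y|_p = p^{-k - m}$ for some $m \ge 0$. We now want $|x + y|_p \le \max(|x|_p, |y|_p)$, that is, $p^{-k - m} \le p^{-k}$, which is trivial, since $m \ge 0$.∎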

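Theorem: $d_p$ is a metric.

Proof: Trivially, $d_p(x, y) = 0 \Leftrightarrow x = y$ and $d_p(x, y) = d_p(y, x)$. It remains to show the triangle inequality, that is, $d_p(x, z) \le d_p(x, y) + d_p(y, z)$. By the above lemma, we have $|x - z|_p = |(x - y) + (y - z)|_p \le \max(|x - y|_p, |y - z|_p) \le |x - y|_p + |y - z|_p$.∎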

The set we get when identifying Cauchy sequences according to this metric is called $\mathbb{Q}_p$, the $p$-adic numbers. It has many similarities with $\mathbb{R}$, and you can even do analysis on it.

Sun, 30 Sep 2012 23:00:00 GMT

It was clear to me at once

I love this funny little thing

That was hanging in my grass

I love the distinctive scent

To be smelled in the garden air

To see how the brown dough

Turns white as time goes by

Later on it went out with me

But from the café I was thrown out

What a poor little brown thing

That was hanging in my grass

Nobody likes to see the little thing

Even when it comes along with me

All the people walk away

Only the dogs come near

I love this funny little thing

That was hanging in my grass

Tomorrow I will go and buy flowers

I love a pile of shit

Sun, 30 Sep 2012 12:35:00 GMT

Will the following code compile?

class NAT {};
class ZERO : public NAT {};
class FAIL {};

template <class C>
class SUCC : public NAT {
public:
  NAT* doWitnessing () {
    return (C*)(0xbad);
  }
  SUCC () {
    doWitnessing();
  }
};

int main (void) {
  (SUCC<SUCC<SUCC<ZERO> > > ());
}

Well, probably. But what about

class NAT {};
class ZERO : public NAT {};
class FAIL {};

template <class C>
class SUCC : public NAT {
public:
  NAT* doWitnessing () {
    return (C*)(0xbad);
  }
  SUCC () {
    doWitnessing();
  }
};

int main (void) {
  (SUCC<SUCC<SUCC<FAIL> > > ());
}

Well, hopefully not, will it? Hm. What about

class NAT {};
class ZERO : public NAT {};
class FAIL {};

template <class C>
class SUCC : public NAT {
public:
  NAT* doWitnessing () {
    return new C;
  }
  SUCC () {
    doWitnessing();
  }
};

int main (void) {
  (SUCC<SUCC<SUCC<FAIL> > > ());
}

Probably not. So how about

#include <cstdlib>

class NAT {};
class ZERO : public NAT {};
class FAIL {};

template <class C>
class SUCC : public NAT {
public:
  NAT* doWitnessing () {
    return (C*) random();
  }
  SUCC () {
    doWitnessing();
  }
};

int main (void) {
  (SUCC<SUCC<SUCC<ZERO> > > ());
}

Of course it compiles. Why shouldn't it. And so will

#include <cstdlib>

class NAT {};
class ZERO : public NAT {};
class FAIL {};

template <class C>
class SUCC : public NAT {
public:
  NAT* doWitnessing () {
    return (C*) random();
  }
  SUCC () {
    doWitnessing();
  }
};

int main (void) {
  (SUCC<SUCC<SUCC<FAIL> > > ());
}

maybe. Maybe not. How about

class NAT {};
class ZERO : public NAT {};
class FAIL {};

template <class C>
class SUCC : public NAT {
public:
  void doWitnessing () {
    if (false) NAT a = C();
  }
  SUCC () {
    doWitnessing();
  }
};

int main (void) {
  (SUCC<SUCC<SUCC<FAIL> > > ());
}

and furthermore, what about

class NAT {};
class ZERO : public NAT {};
class FAIL {};

template <class C>
class SUCC : public NAT {
public:
  void doWitnessing () {
    if (false) NAT a = C();
  }
  SUCC () {
    doWitnessing();
  }
};

int main (void) {
  (SUCC<SUCC<SUCC<ZERO> > > ());
}

Got that?

Wed, 22 Aug 2012 23:21:41 GMT

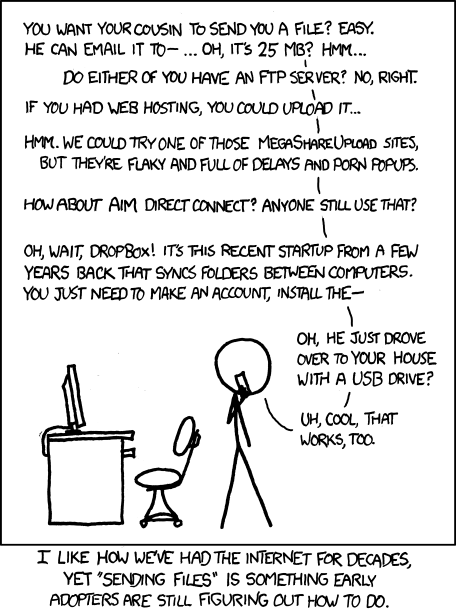

Meanwhile, with the rise of VoIP, this problem is solved in most cases. Routers can be configured to support UPnP, for example. The main problem is that there are plenty of people not knowing how to use tools like, say, scp. And many people are not willing to use any program that is not already installed on their system. They will try to use filehosters, and if you insist on security, they will compress the whole stuff into an encrypted RAR archive - if they have RAR.

So there is a certain motivation to have at least your own upload server - at least you can (mostly) rely on the other person having some sort of web browser. Of course, there are plenty of PHP upload scripts out there. However, the other person might have a slow internet connection, and the file may be several gigabytes large, which is mostly out of the bounds of your usual Apache and PHP configuration. And changing this configuration can be a real pain, as the relevant configuration options are scattered all over the system, and you are likely to miss one and make the upload fail - and you may not want to configure such an upload site just for casual use.

So, my approach was to use Clisp with Hunchentoot. Clisp is small, and Hunchentoot is simple but powerful and gives me full control. I installed the necessary packages with Quicklisp, and produced the following code:

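;; Load the dependencies via Quicklisp.
(ql:quickload :cl-base64)
(ql:quickload :hunchentoot)

;; Serve a minimal upload form at /index.
(hunchentoot:define-easy-handler (index :uri "/index") ()
  "<form enctype=\"multipart/form-data\" action=\"upload\" method=\"POST\">
Please choose a file: <input name=\"uploaded\" type=\"file\" /><br />
<input type=\"submit\" value=\"Upload\" />
</form>")

;; For a file upload, UPLOADED is a list (temporary-path file-name content-type).
;; Move the temporary file to /tmp/, named after the base64 of the client's
;; file name. :uri t selects the URI-safe alphabet, so the name contains no "/".
(hunchentoot:define-easy-handler (upload :uri "/upload") (uploaded)
  (rename-file (car uploaded)
               (concatenate 'string "/tmp/"
                            (cl-base64:string-to-base64-string (cadr uploaded) :uri t)))
  "SUCCESS")

;; Start the server on port 8000.
(hunchentoot:start (make-instance 'hunchentoot:easy-acceptor :port 8000))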

The uploaded file will be moved to /tmp/, and be named as the base64-representation of the name given by the client (to prevent any exploits). As you may notice, this is a script that is not made for "production use". I can just run it when I need it, let it run until the file is uploaded, and then stop it again. This is exactly what I need.

Well, one possible extension would be to add UPnP-Support, so I do not have to manually forward my router's port to use it.

Update: Notice that you should set LANG=C or something similar, otherwise binary data might be re-encoded.

Mon, 06 Aug 2012 12:30:00 GMT

The Linux desktop seems to be "stuck" somehow. The systems get more and more complicated, having a lot more little tweaks popping out of edges when pressing keys, but the usability does not increase in any way. That is, you can get used to the desktop environments, but especially advanced users want to adapt the computer to their needs rather than the other way around.

I think there is a simple fact that we just have to admit: The Linux desktop is good as it is.

No matter how many tweaks you add, the basic design has not changed and just leaves a few alternatives which are commonly used. There are some niche products like tabbed and tiling window managers, there are the usual window managers with a task bar, there are docks, there is stuff like a common main menu for all applications. There are a few tweaks like key combinations that make some other bars appear. And except for a few newer graphical tweaks (like window previews in the taskbar or Mac's Exposé) that need GPU acceleration, everything has been there for years. And still, the old Windows 98 desktop default configuration is much better for both beginners and advanced users than much of the new stuff, and while its design may not be the most beautiful, it is acceptable.

Of course, a desktop environment does not only consist of the window management. But the same goes for file managers and editors: There are several approaches to building a UI, but all of them have been there for years. There is probably always some space left for innovation, but at least currently I do not see any.

Therefore, it seems like all this blabber about new versions of desktop environments is mainly about the recombination of already existing concepts, and about design. I would appreciate it if the responsible people concentrated more on making the software stable than on making it new. In that sense, I like projects like Trinity very much - but of course, for a programmer, it is more prestigious to create something entirely new than to just keep the old stuff stable.

So here is a suggestion for something really "new": As I pointed out, I think all desktop programming is mainly about the recombination of existing features. There is a lot of experience with these features, and it applies to most operating systems. So why not use this knowledge to build a "meta-framework" for defining how features can be combined, platform-independently? What I am thinking of is some simple scriptable API with which users can program and configure their own environment. For example, I could think of something that is based on HTML and CSS, with some extensions that allow including program windows, but without having to care about how exactly the graphics backend works: you could include program windows in your DOM tree through a special element, like you would do with images and stuff. HTML and CSS, because these are formats that have been here for years, are well-tested, well-supported and well-maintained, but of course, any other similar format would do as well.

Such a thing is definitely not trivial to implement, but I think it should be possible, and it would be nice.

And it would be better than bloating up existing environments.